Issue #301 - The ML Engineer 🤖

State of Prod ML: 2024 Survey, Learnings from 100k A/B Tests, Pragmatic ML Eng, Stanford's ML System Seminars, PolaRS GPU + more 🚀

Even though it's already been a week, we continue to celebrate our 300th Issue together 🚀🚀🚀 This is a HUGE milestone we want to celebrate with YOU! As part of this we are launching a survey on The State of Production ML, and your contribution would make a significant difference to the whole ML ecosystem ⭐

This week in Machine Learning:

State of Prod ML: 2024 Survey

Learnings from 100k A/B Tests

Pragmatic Machine Learning Eng

Stanford's ML System Seminars

PolaRS GPU Accel DataFrames

Open Source ML Frameworks

Awesome AI Guidelines to check out this week

+ more 🚀

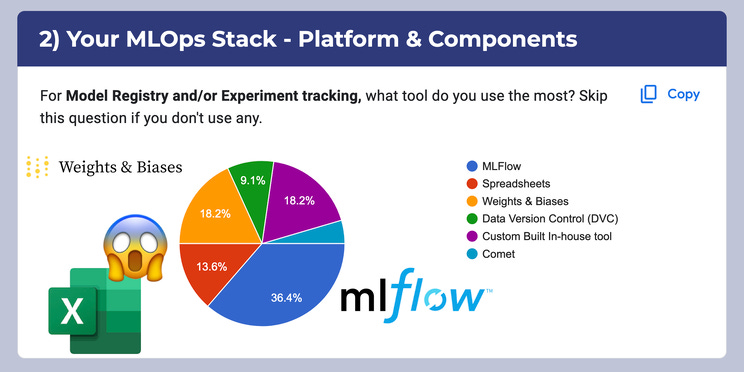

In Machine Learning, spreadsheets just keep coming back, this time as one of the top choices for experiment tracking and model registry tools. Only a week after launching our survey on the State of Production ML, we are already seeing great insights! We have designed the questions to give a meaningful view of the current landscape of production ML in 2024. If you have a chance, we would be grateful if you could spend a few minutes on the survey to share the machine learning tools and platforms you use in your production ML development. Your input will help create a comprehensive overview of common practices, tooling preferences, and challenges faced when deploying models to production, ultimately benefiting the entire ML community 🚀

What do you learn from running 100,000 A/B tests? Despite the disproportionately larger A/B-testing culture at billion-user scale, companies like Amazon and Meta still face significant challenges in large-scale experimentation, and this resource captures some of these challenges. Some of the key issues that these types of organisations face include information overload from numerous concurrent experiments, diminishing returns on the approaches to extract causal insights, infrastructural limitations affecting scalability, and cultural differences in experimentation practices. This is a great insight for organisations that are building their experimentation practices, although we have to remember that only a small subset of companies operate at the scale of these tech giants.
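For readers newer to experimentation, the core of a single A/B test can be sketched in a few lines. The snippet below is a minimal illustration of a two-proportion z-test on conversion counts using only the Python standard library. It is not taken from the linked resource; the function name `two_proportion_z_test` and the example counts are hypothetical and chosen purely for illustration.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates.

    conv_a / conv_b are conversion counts and n_a / n_b are sample sizes
    for the control (A) and treatment (B) groups. Hypothetical helper.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled conversion rate under the null hypothesis of no difference
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    variance = p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b)
    if variance == 0:
        raise ValueError("pooled rate is 0 or 1; the z-test is undefined")
    z = (p_b - p_a) / math.sqrt(variance)
    # Two-sided p-value from the standard normal distribution via erfc
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Illustrative (made-up) counts: 1,000 users per arm
z, p = two_proportion_z_test(conv_a=100, n_a=1000, conv_b=150, n_b=1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

When many experiments run concurrently, a per-test threshold such as 0.05 will flag some differences by chance alone, which is one reason the volume of results becomes hard to interpret at scale.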

Pragmatic Machine Learning Eng

Free Online Book: The Pragmatic Programmer for Machine Learning Engineering, A Practical Deep Dive. This is a great resource on the role of software engineering in developing robust, efficient, and maintainable machine learning systems for production. The book bridges the gap between machine learning and software engineering by offering best practices for designing, coding, deploying, documenting, and testing machine learning pipelines, and provides key lessons on recognizing that poor software practices can lead to technical debt, reproducibility issues, and costly failures.

Stanford has been publishing seminar videos on the frontier of machine learning systems, covering key concepts around challenges and solutions in AI research and industry in conversation with thought leaders in the space. This is a great video series featuring expert speakers from academia and industry. It covers a wide range of topics including programming ML systems with frameworks like JAX, data labeling with tools like Snorkel, deploying robust models, hyperparameter optimization, and end-to-end ML pipelines.

Exciting to continue seeing developments in GPGPU with PolaRS (aka Pandas in Rust), which has integrated GPU acceleration into its Python library. The initial release leverages NVIDIA RAPIDS' cuDF, which provides production machine learning practitioners up to 13x speed improvements on compute-intensive data processing tasks like joins and GROUP BYs. It will certainly be an exciting space to keep an eye on, particularly given the opportunity to leverage Vulkan-based GPGPU backends for support across 1000s of GPU cards, such as with our GPU acceleration framework Vulkan Kompute.

Upcoming MLOps Events

The MLOps ecosystem continues to grow at breakneck speed, making it ever harder for us as practitioners to stay up to date with relevant developments. A fantastic way to keep on top of relevant resources is through the great community and events that the MLOps and Production ML ecosystem offers. This is the reason why we have started curating a list of upcoming events in the space, which are outlined below.

Upcoming conferences where we're speaking:

TUM AI@WORK - 10th October @ Germany

Other upcoming MLOps conferences in 2024:

ODSC West - 29th October @ USA

Data & AI Summit - 10th June @ USA

AI Summit London - 12th June @ UK

World Summit AI - 9th October @ Netherlands

MLOps World - 8th November @ USA

In case you missed our talks:

The State of AI in 2024 - WeAreDevelopers 2024

Responsible AI Workshop Keynote - NeurIPS 2021

Practical Guide to ML Explainability - PyCon London

ML Monitoring: Outliers, Drift, XAI - PyCon Keynote

Metadata for E2E MLOps - KubeCon NA 2022

ML Performance Evaluation at Scale - KubeCon Eur 2021

Industry Strength LLMs - PyData Global 2022

ML Security Workshop Keynote - NeurIPS 2022

Open Source MLOps Tools

Check out the fast-growing ecosystem of production ML tools & frameworks at the GitHub repository, which has reached over 10,000 ⭐ GitHub stars. We are currently looking for more libraries to add. If you know of any that are not listed, please let us know or feel free to open a PR. Four featured libraries in the GPU acceleration space are outlined below.

Kompute - Blazing fast, lightweight and mobile phone-enabled GPU compute framework optimized for advanced data processing use cases.

CuPy - An implementation of NumPy-compatible multi-dimensional array on CUDA. CuPy consists of the core multi-dimensional array class, cupy.ndarray, and many functions on it.

JAX - Composable transformations of Python+NumPy programs: differentiate, vectorize, JIT to GPU/TPU, and more.

cuDF - Built on the Apache Arrow columnar memory format, cuDF is a GPU DataFrame library for loading, joining, aggregating, filtering, and otherwise manipulating data.

If you know of any open source and open community events that are not listed do give us a heads up so we can add them!

OSS: Policy & Guidelines

As AI systems become more prevalent in society, we face bigger and tougher societal challenges. We have seen a large number of resources that aim to tackle these challenges in the form of AI Guidelines, Principles, Ethics Frameworks, etc. However, there are so many resources that it is hard to navigate them. Because of this we started an Open Source initiative that aims to map the ecosystem to make it simpler to navigate. You can find multiple principles in the repo. Some examples include the following:

MLSecOps Top 10 Vulnerabilities - This is an initiative that aims to further the field of machine learning security by identifying the top 10 most common vulnerabilities in the machine learning lifecycle as well as best practices.

AI & Machine Learning 8 principles for Responsible ML - The Institute for Ethical AI & Machine Learning has put together 8 principles for responsible machine learning that are to be adopted by individuals and delivery teams designing, building and operating machine learning systems.

An Evaluation of Guidelines - The Ethics of AI Ethics: a research paper that analyses multiple ethics principles.

ACM's Code of Ethics and Professional Conduct - This is the code of ethics that was put together in 1992 by the Association for Computing Machinery and updated in 2018.

If you know of any guidelines that are not in the "Awesome AI Guidelines" list, please do give us a heads up or feel free to add a pull request!

About us

The Institute for Ethical AI & Machine Learning is a European research centre that carries out world-class research into responsible machine learning.