Issue #365 - The ML Engineer 🤖

MLOps 2015-2035 Past and Future, Andrew Ng AI Career Advice, The State of MLOps 2025 Survey 🔥, ZenML 1000+ Case Studies LLMOps, Top Python Libraries in 2025 + more 🚀

The results of the Survey on Production MLOps are out: ethical.institute/state-of-ml-2025 🚀🚀🚀 As part of the release we have updated the interface to enable real-time toggling between 2024 and 2025 data, and refreshed the look with a cool new code-editor theme 😎 Check it out and share it around!!

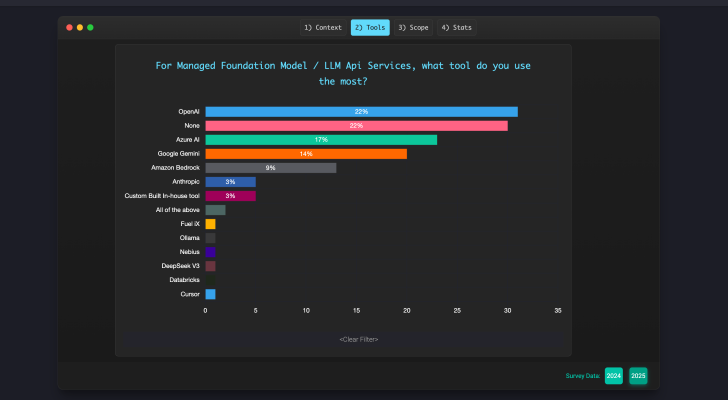

Further insights: The results are in for 2025 GenAI providers in Production ML, and the data reflects what we saw this year: OpenAI losing its moat; Gemini skyrocketing; tooling standardisation; etc. Here are the key insights 👇

OpenAI climbs to the top as the most-used provider with 22%, although that is down from 24% in 2024, which reflects its struggle to hold market share.

Last year “No API” was the top choice; it now falls to second place with 22% (vs 38% in 2024) as LLMs eat the world and adoption increases.

Surprisingly, Azure AI stays strong in the Top 3 with 17% (vs 12% in 2024), helped by its close partnership with OpenAI / GitHub / etc.

Google Gemini has the largest jump with 14%, up from 3% in 2024, a clear sign that Google is taking significant market share.

Anthropic stays flat this year at 3% (vs 3% in 2024); I expect this one to grow next year with increased Claude adoption.

Organisations are also moving away from building custom in-house tooling, down to 3% (vs 5% in 2024), which suggests consolidation towards providers.

If you want to dive deeper you can access the full results here: https://ethical.institute/state-of-ml-2025 🔥
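For readers who want to compare the year-on-year movement at a glance, here is a minimal sketch that computes the percentage-point change and the relative change for the figures quoted above. The dictionary layout and the `changes` helper are illustrative assumptions, not part of the survey tooling, and since the quoted shares are rounded the relative changes are only approximate.

```python
# Survey shares (percent of respondents) as quoted in the summary above,
# stored as (share_2024, share_2025). The layout is an illustrative assumption.
shares = {
    "OpenAI": (24, 22),
    "No API": (38, 22),
    "Azure AI": (12, 17),
    "Google Gemini": (3, 14),
    "Anthropic": (3, 3),
    "Custom in-house tooling": (5, 3),
}


def changes(shares):
    """Return {name: (point_change, relative_change_percent)} per provider."""
    out = {}
    for name, (y2024, y2025) in shares.items():
        delta = y2025 - y2024            # change in percentage points
        relative = delta / y2024 * 100   # change relative to the 2024 share
        out[name] = (delta, relative)
    return out


result = changes(shares)

# Print providers ordered from largest gain to largest loss.
for name, (delta, rel) in sorted(
    result.items(), key=lambda kv: kv[1][0], reverse=True
):
    print(f"{name:<24} {delta:+3d} pp  ({rel:+.0f}% relative)")
```

Looking at both numbers helps separate a small share that grew a lot in relative terms (Gemini) from a large share that moved by many points (“No API”).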

This week in ML Engineering:

Andrew Ng AI Career Advice

The State of MLOps 2025 Survey 🔥

ZenML 1000+ Case Studies LLMOps

Top Python Libraries in 2025

Open Source ML Frameworks

Awesome AI Guidelines to check out this week

+ more 🚀

MLOps 2015-2035; The Past and Future

My keynote on a journey through the past and future of Prod ML (2015-2035) is out!! I really enjoyed this one, as I had to reflect on my last decade in MLOps as well as make predictions for the next decade. Would love to get thoughts! The topic of “Great MLOps” is now more important than ever as we are starting to see AI being rolled out into ever more critical infrastructure, so we need to focus on turning models into reliable and scalable production systems. In the talk I look back at the last decade and categorise it as the Genesis of MLOps in 2015 (of course from Google’s paper), followed by a period of Messy Innovation between 2018 and 2022 with an explosion of tools that was accelerated by COVID, and then a further acceleration with the growth of LLMOps in 2023–2025. The journey so far has been really fun, but unfortunately we have only scratched the surface; back in 2015 I would have bet that we’d be much further ahead in key areas that are still not fully mature, such as ML Monitoring or ML Serving at scale. I also really enjoyed putting together a few predictions for the next decade; I tried to be relatively conservative, but looking back I am still not sure we’ll be there in a decade - this is why I chuckle when someone proposes AGI is coming soon... There is a lot to digest here, and I would love to get further thoughts as I will be looking to iterate on this content throughout the year to come!

When Andrew Ng and Laurence Moroney give career advice, we listen. This is really grounded guidance, as it provides a snapshot of the current state of the (tough) hiring market together with tips to increase your chances. There are also some good reminders that AI product-building is accelerating, with AI coding tools making it possible to ship more powerful software far faster, so a differentiator for production ML practitioners is no longer just implementing models but choosing what to build, writing clear specs, iterating with users, and staying current on tooling. It is also a reminder to approach vibe coding conscientiously, as otherwise it can backfire - used correctly, however, it can help augment skills and breadth of knowledge. Check it out!

Out of the blue the ZenML team dropped a report on 1000+ case studies in LLMOps - check it out! Some key highlights: 1) We are moving away from proofs of concept towards real-world use-cases hitting production. 2) We are seeing trends across tight cost/latency control, durable orchestration for long-running agent workflows, observability, circuit breakers, and progressive autonomy with human handoffs. 3) One of the bigger technical shifts is the transition from prompt engineering to context engineering. 4) Evals and guardrails are still maturing but are now becoming standard requirements for production use-cases. 5) MCP is quietly stabilizing as one of the standards (+ the move to the Linux Foundation is quite promising) but still lacks foundations like security, etc. Really great initiative from the ZenML team; check out the report and dive into the database of case studies as well.

Check out the Top 10 Python Libraries in 2025! Here are the lists for both general tools and AI tools:

Top 10 General Python Libraries:

ty - a blazing-fast type checker built in Rust.

complexipy - measures how hard it is to understand the code.

Kreuzberg - extracts data from 50+ file formats.

throttled-py - control request rates with five algorithms.

httptap - timing HTTP requests with waterfall views.

fastapi-guard - security middleware for FastAPI apps.

modshim - seamlessly enhance modules without monkey-patching.

Spec Kit - executable specs that generate working code.

skylos - detects dead code and security vulnerabilities.

FastOpenAPI - easy OpenAPI docs for any framework.

Top 10 AI Python Libraries:

MCP Python SDK & FastMCP - connect LLMs to external data sources.

Token-Oriented Object Notation (TOON) - compact JSON encoding for LLMs.

Deep Agents - framework for building sophisticated LLM agents.

smolagents - agent framework that executes actions as code.

LlamaIndex Workflows - building complex AI workflows with ease.

Batchata - unified batch processing for AI providers.

MarkItDown - convert any file to clean Markdown.

Data Formulator - AI-powered data exploration through natural language.

LangExtract - extract key details from any document.

GeoAI - bridging AI and geospatial data analysis.

Upcoming MLOps Events

The MLOps ecosystem continues to grow at breakneck speed, making it ever harder for us as practitioners to stay up to date with relevant developments. A fantastic way to keep on top of relevant resources is through the great community and events that the MLOps and Production ML ecosystem offers. This is the reason why we have started curating a list of upcoming events in the space, which are outlined below.

Conferences for 2026 are coming soon! In the meantime, in case you missed our talks:

The State of AI in 2025 - WeAreDevelopers 2025

Prod Generative AI in 2024 - KubeCon AI Day 2025

The State of AI in 2024 - WeAreDevelopers 2024

Responsible AI Workshop Keynote - NeurIPS 2021

Practical Guide to ML Explainability - PyCon London

ML Monitoring: Outliers, Drift, XAI - PyCon Keynote

Metadata for E2E MLOps - KubeCon NA 2022

ML Performance Evaluation at Scale - KubeCon Eur 2021

Industry Strength LLMs - PyData Global 2022

ML Security Workshop Keynote - NeurIPS 2022

Open Source MLOps Tools

Check out the fast-growing ecosystem of production ML tools & frameworks at the GitHub repository, which has reached over 10,000 ⭐ GitHub stars. We are currently looking for more libraries to add - if you know of any that are not listed, please let us know or feel free to open a PR. Four featured libraries in the GPU acceleration space are outlined below.

Kompute - Blazing fast, lightweight and mobile phone-enabled GPU compute framework optimized for advanced data processing use cases.

CuPy - An implementation of NumPy-compatible multi-dimensional array on CUDA. CuPy consists of the core multi-dimensional array class, cupy.ndarray, and many functions on it.

JAX - Composable transformations of Python+NumPy programs: differentiate, vectorize, JIT to GPU/TPU, and more.

cuDF - Built on the Apache Arrow columnar memory format, cuDF is a GPU DataFrame library for loading, joining, aggregating, filtering, and otherwise manipulating data.

If you know of any open source and open community events that are not listed do give us a heads up so we can add them!

OSS: Policy & Guidelines

As AI systems become more prevalent in society, we face bigger and tougher societal challenges. We have seen a large number of resources that aim to tackle these challenges in the form of AI Guidelines, Principles, Ethics Frameworks, etc. However, there are so many resources that it is hard to navigate them. Because of this we started an Open Source initiative that aims to map the ecosystem to make it simpler to navigate. You can find multiple principles in the repo - some examples include the following:

MLSecOps Top 10 Vulnerabilities - This is an initiative that aims to further the field of machine learning security by identifying the top 10 most common vulnerabilities in the machine learning lifecycle as well as best practices.

AI & Machine Learning 8 principles for Responsible ML - The Institute for Ethical AI & Machine Learning has put together 8 principles for responsible machine learning that are to be adopted by individuals and delivery teams designing, building and operating machine learning systems.

An Evaluation of Guidelines - The Ethics of Ethics; a research paper that analyses multiple ethics principles.

ACM’s Code of Ethics and Professional Conduct - This is the code of ethics that was put together in 1992 by the Association for Computing Machinery and updated in 2018.

If you know of any guidelines that are not in the “Awesome AI Guidelines” list, please do give us a heads up or feel free to add a pull request!

About us

The Institute for Ethical AI & Machine Learning is a European research centre that carries out world-class research into responsible machine learning.